Deep learning cannot make causal reasoning, and graph model (GNN) is one of the solutions. Professor Sun Maosong of Tsinghua University published a review paper, comprehensively expounded GNN and its methods and applications, and proposed a unified representation that can characterize the propagation steps in various GNN models. In the text chart, it is recommended to print the high-definition plastic stickers for reference.

What is the biggest weakness of deep learning?

The answer to this question is benevolent, but the Turing Award winner Judea Pearl has a chance of 99.9%.It is impossible to conduct causal reasoning.

The industry is actively exploring this issue, and one of the promising directions isGraph Neural Network (GNN).

Recently, Professor Sun Maosong of Tsinghua University published a paper in arXiv. Graph Neural Networks: A Review of Methods and ApplicationsThe author has made a detailed and comprehensive review of the existing GNN model.

"Graphic neural network is an organic combination of connectionism and symbolism, which not only enables the deep learning model to be applied to the non-Euclidean structure of the graph, but also gives the deep learning model a certain causal reasoning ability." The first author Zhou said.

"Today, when the robustness and interpretability of deep learning methods are questioned,Graph neural network may provide a feasible direction for the development of artificial intelligence in the future.. "

GNN has recently received extensive attention in the field of deep learning. However, for researchers who want to quickly understand this area, they may face problems with complex models and many applications.

"This article hopes to provide readers with a higher level of perspective and a quick understanding of the motivations and advantages of different models in the GNN field." Zhou Jie told Xinzhiyuan: "At the same time, by categorizing different applications, it is convenient for researchers in different fields to quickly Learn about applying GNN to different areas of literature."

said with no exaggeration,Chart in the paperFor researchers who want to learn about GNN and even causal reasoning,It should be printed in HD and then attached to the wall for reference.-

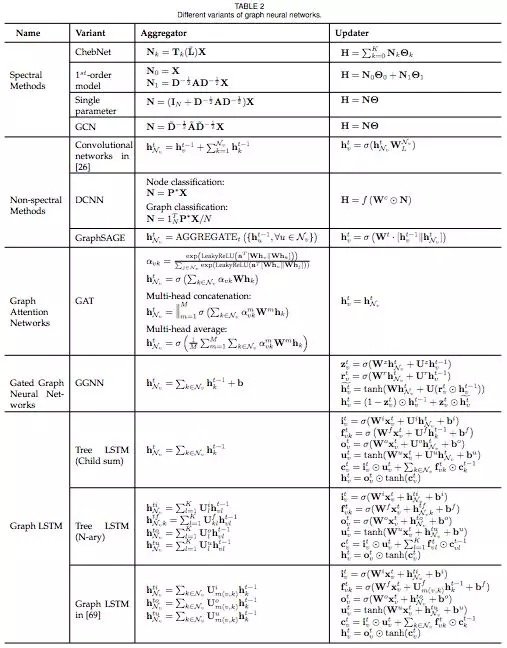

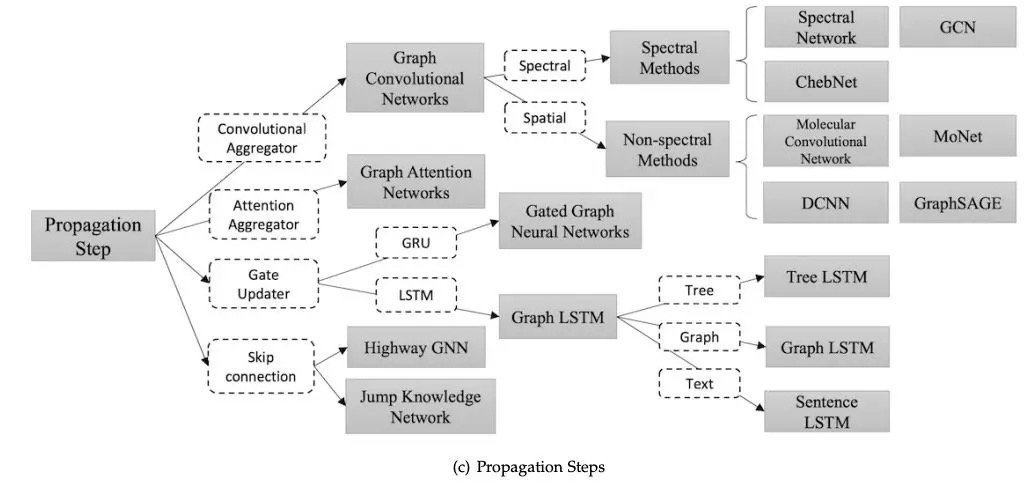

Various variants of GNN can easily distinguish different GNN models by comparing their respective aggregators & updaters.This is just one example of a powerful chart in this review.

Want to get to know GNN quickly, this article is absolutely correct.

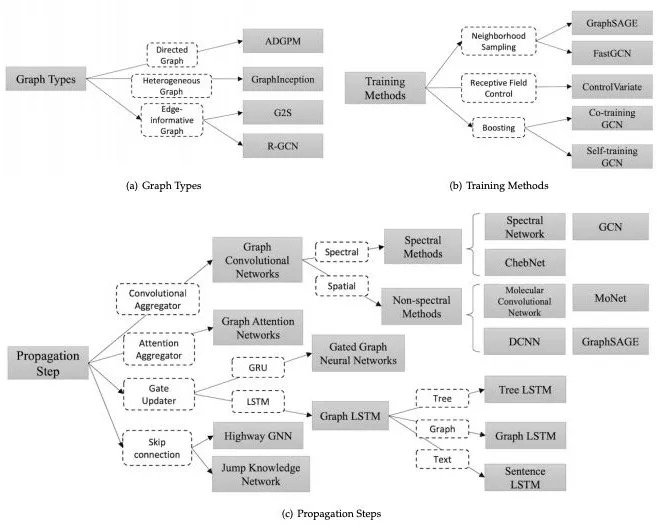

In terms of content and model, this paper introduces the GNN variants of GNN original model construction and existing problems, including how to deal with different types of graphs, how to carry out efficient information transfer and how to Speed up the training process. Finally, several general frameworks proposed in recent years are introduced. They summarize and summarize several existing methods and have strong expressive power.

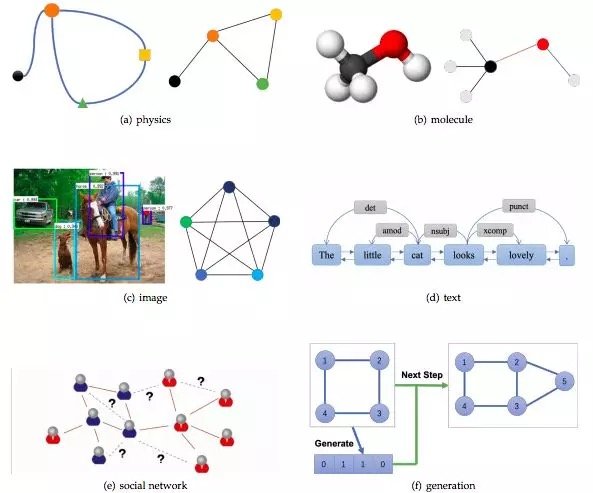

In application, the article divides GNN's application areas into structured scenes, unstructured scenes and other scenes and introduces classic GNN applications such as physics, chemistry, images, text, graph generation models, and combinatorial optimization problems.

Finally, the paper proposes four open questions, including how to deal with the smoothing problem caused by stacked multi-layer GNN, how to deal with dynamically changing graph structures, how to use common methods to deal with unstructured data and how to extend it to a larger scale. On the network.

The author also compiled a list of GNN papers:

https://github.com/thunlp/GNNPapers

The following is a partial excerpt from Xin Zhiyuan on this review.Click to read the original text to view arXiv papers.

Original GNN and its limitations

The concept of GNN is first and foremost in the paper by F. Scarselli et al.The graph neural network model(F. Scarselli et. al. 2009). Here, we describe the original GNN and list the limitations of the original GNN in terms of presentation capabilities and training efficiency.

Next, we introduce several different GNN variants with different graph types, using different propagation functions and training methods.

Finally, we introduce three general frameworks, namely message passing neural network (MPNN), non-local neural network (NLNN), and graph network (GN). MPNN combines various graph neural networks and graph convolutional network methods; NLNN combines several "self-attention"The type of method; and the graph network GN can summarize almost all of the graph neural network variants mentioned in this article.

Graph neural network

As mentioned earlier, the concept of graph neural network (GNN) was first proposed by Scarselli et al. in 2009, which extends the existing neural network for processing the data represented in graphs. In the figure, each node is defined by its characteristics and related nodes.

Although the experimental results show that GNN isA powerful architecture for modeling structured dataHowever, the original GNN still has some limitations.

First, for a fixed node, the hidden state of the original GNN iteratively updating the node is inefficient. If we relax the assumption of a fixed point, we can design a multi-layered GNN to get a stable representation of the node and its neighborhood.

Second, GNN uses the same parameters in iterations, and most popular neural networks use different parameters in different layers, which is a hierarchical feature extraction method. In addition, the update of the node hidden state is a sequential process that can beRNNKernel (such as GRU and LSTMBenefit from).

Third, there are also some information features on the side that cannot be modeled in the original GNN. In addition, how to learn the hidden state of the side is also an important issue.

Finally, if we focus on the representation of the node instead of the graph, it is not appropriate to use a fixed point because the distribution of representations at the fixed point is numerically smooth, and the amount of information that distinguishes each node is also small. .

Variant of graph neural network

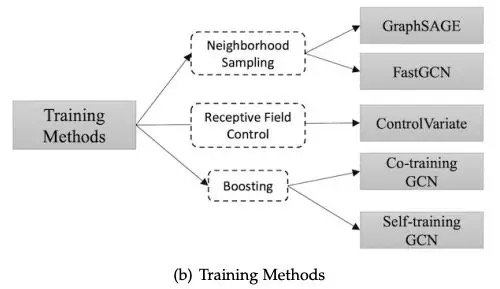

In this section, we propose several variants of the graph neural network. The first is variants that run on different graph types that extend the presentation capabilities of the original model. Second, we list several variants of the propagation steps (convolution, gate mechanism, attention mechanism, and skip connection) that can be better learned. Finally, we describe the titles that use advanced training methods that improve training efficiency.

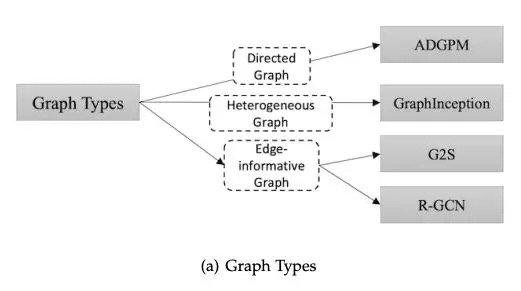

Figure 2 outlines the different variants of GNN.

Graph Types

In the original GNN, the input graph consists of nodes with label information and undirected edges, which is the simplest graphical format. However, there are many different graphics in the world. Here, we will introduce some methods for modeling different types of graphics.

- Directed Graphs

The first variant of the graph is a directed graph. An undirected edge can be thought of as two directed edges, indicating that there is a relationship between the two nodes. However, the directed edge can bring more information than the undirected edge. For example, in a knowledge graph, the edge starts from the head entity and ends with the tail entity. The head entity is the parent class of the tail entity, which indicates that we should treat the information propagation process of the parent class and the child class differently. An example of a directed graph is ADGPM (M. Kampffmeyer et. al. 2018).

- Heterogeneous Graphs

The second variant of the graph is a heterogeneous graph with several types of nodes. The easiest way to handle heterogeneous graphs is to convert each node's type to a one-hot feature vector that is joined to the original feature. Heterogeneous graphs such as GraphInception.

- Edge-informative Graph

Another variation of the graph is that each edge has information, such as a weight or a type of edge. For example G2S and R-GCN.

Graph variants using different training methods

GNN variants modified at the propagation step

GNN's three general frameworks

In addition to the different variants of the graph neural network, we have introduced several general frameworks designed to integrate different models into one framework.

J. Gilmer et al. (J. Gilmer et. al. 2017) proposedMessaging neural network(message passed neural network, MPNN), unified various methods of graph neural network and graph convolution network.

X. Wang et al. (X. Wang et. al. 2017) proposedNon-local neural network(non-local neural network, NLNN), which combines several "self-attention" style methods.

PW Battaglia et al. (PW Battaglia et. al. 2018) proposedGraph network(graph network, GN), which unifies the unified MPNN and NLNN methods and many other variants, such as Interaction Networks, Neural Physics Engine, CommNet, structure2vec, GGNN, Relational Network (Relation Network) ), Deep Sets and Point Net.

Several unresolved issues

Despite the great success of GNN in different areas, it is worth noting that the GNN model does not provide a satisfactory solution for any graph task under any conditions. Here, we will present some open questions for further study.

Shallow structure

Traditional deep neural networks can stack hundreds of layers for better performance, because deeper structures have more parameters and can significantly improve the expressiveness of the network. However, GNN is always very shallow, and most do not exceed three layers.

Experiments have shown that stacking multiple GCN layers will result in excessive smoothing, that is, all vertices will converge to the same value. Although some researchers have managed to solve this problem, it is still the biggest limitation of GNN. Designing a true depth GNN is an exciting challenge for future research and will make a significant contribution to further understanding of GNN.

Another challenging issue with dynamic graphics is how to handle graphics with dynamic structures. Static graphs are always stable, so modeling them is feasible, while dynamic graphs introduce changing structures. When edges and nodes appear or disappear, the GNN cannot make changes adaptively. The current research on dynamic GNN is also actively underway, and we believe it is an important milestone for the stability and adaptability of general GNN.

Unstructured scene

We discussed the use of GNN in non-structural scenarios, but we did not find the best way to generate graphs from raw data. In the image domain, some research can be utilizedCNNThe feature map is obtained, then it is upsampled to form a super pixel as a node, and some directly use some object detection algorithms to acquire the object node. In the text field, some studies use syntactic trees as syntactic maps, and others use fully connected graphs. Therefore, the key is to find the best way to generate graphs, so that GNN can play a bigger role in a wider field.

Extensibility problem

How to apply embedded algorithms to large-scale network environments such as social networks or recommendation systems is a fatal problem faced by almost all graphics embedding algorithms, and GNN is no exception. Extending GNN is difficult because many of the core processes involved consume computational power in a big data environment.

This difficulty is reflected in several aspects: First, the graph data is not regular, each node has its own neighborhood structure, so it cannot be batch processed. Second, when the number of nodes and edges that exist reaches millions, it is not feasible to calculate the Laplacian of the graph. In addition, we need to point out that the scalability can determine whether the algorithm can be applied to the actual scene. There are already some studies that propose solutions to this problem, and we are paying close attention to these new developments.

in conclusion

In the past few years, GNN has become a powerful and practical tool for machine learning tasks in the field of graphics. This progress depends on expressiveness, model flexibility and advances in training algorithms. In this paper, we have a comprehensive overview of the graph neural network. For the GNN model, we introduce GNN variants classified by graph type, propagation type, and training type.

In addition, we have summarized several general frameworks that collectively represent different GNN variants. In terms of application classification, we divide the GNN application into structural scenes, unstructured scenes, and other 18 scenes, and then detail the applications in each scene. Finally, we propose four open questions, pointing out the main challenges of graph neural networks and future research directions, including model depth, scalability, dynamic graph processing, and processing capabilities for unstructured scenarios.

This article is transferred from the public number Xinzhiyuan,Original address

Comments