Which deep learning framework should beginners use? Naturally, the simpler the better, the faster it trains the better, and the higher the test accuracy the better! So, should we choose PyTorch or Keras?

Keras and PyTorch are certainly the most user-friendly deep learning frameworks for beginners. You use them almost like a simple language for describing an architecture, telling the framework which layers to use. This removes a lot of abstract work, such as designing static computation graphs and specifying the dimensions and contents of every tensor.

But which framework is better? Different developers and researchers naturally have different preferences and opinions. This article compares PyTorch and Keras in terms of abstraction and performance, and introduces a new benchmark that reproduces and compares all the pre-trained models of the two frameworks.

In the Keras and PyTorch benchmark project, Curtis G. Northcutt, a PhD student at MIT, reproduced 34 pre-trained models. The benchmark brings Keras and PyTorch together in a single framework, so we can compare the two directly and see which framework works better for which models. For example, the author reports that ResNet-architecture models perform better in PyTorch than in Keras, while Inception-architecture models perform better in Keras than in PyTorch.

Keras and PyTorch benchmark project: https://github.com/cgnorthcutt/benchmarking-keras-pytorch

Performance and ease of use of the two frameworks

As a high-level wrapper around TensorFlow, Keras has a very high level of abstraction and hides many API details. Although PyTorch is easier to use than TensorFlow with its static computation graphs, Keras generally hides even more details. As for performance, every framework is heavily optimized, so the differences are small and should not be the main selection criterion.

Ease of use

Keras is a higher-level framework that wraps commonly used deep learning layers and operations into convenient building blocks, so complex models can be assembled like toy bricks. Developers and researchers do not need to deal with much of the underlying complexity of deep learning.

PyTorch provides a relatively low-level environment for experimentation that gives users more freedom to write custom layers, inspect numerical optimization, and so on. For example, in PyTorch 1.0 the compilation tool torch.jit includes a language called TorchScript, a subset of Python that developers can use to further optimize their models.

We can compare the ease of use of the two by defining a simple convolutional network in each:

```python
from keras.models import Sequential
from keras.layers import Conv2D, MaxPool2D, Flatten, Dense

model = Sequential()
model.add(Conv2D(32, (3, 3), activation='relu', input_shape=(32, 32, 3)))
model.add(MaxPool2D())
model.add(Conv2D(16, (3, 3), activation='relu'))
model.add(MaxPool2D())
model.add(Flatten())
model.add(Dense(10, activation='softmax'))
```

As shown above, Keras often embeds computations as arguments to its API (the activation function, for instance, is just a parameter of each layer), so the code is very concise. Below is the PyTorch definition, which is usually written with classes and instances and requires many of the dimension parameters of individual operations to be spelled out.

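```python
import torch.nn as nn
import torch.nn.functional as F

class Net(nn.Module):
    def __init__(self):
        super(Net, self).__init__()
        self.conv1 = nn.Conv2d(3, 32, 3)       # 3 input channels -> 32 feature maps, 3x3 kernel
        self.conv2 = nn.Conv2d(32, 16, 3)
        self.fc1 = nn.Linear(16 * 6 * 6, 10)   # 16 channels x 6 x 6 after two conv + pool stages
        self.pool = nn.MaxPool2d(2, 2)

    def forward(self, x):                      # x has shape (N, 3, 32, 32), channels first
        x = self.pool(F.relu(self.conv1(x)))
        x = self.pool(F.relu(self.conv2(x)))
        x = x.view(-1, 16 * 6 * 6)             # flatten for the fully connected layer
        x = F.log_softmax(self.fc1(x), dim=-1) # log-probabilities (pair with nn.NLLLoss)
        return x

model = Net()
```

Although Keras feels easier to use than PyTorch, the difference between the two is small, and writing models in either is expected to keep getting easier.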
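The `16 * 6 * 6` in the PyTorch version is exactly the kind of dimension bookkeeping that Keras's `Flatten()` handles for you. Here is a small stdlib-only sketch of that arithmetic, assuming 32x32 inputs, unpadded 3x3 convolutions with stride 1, and 2x2 max pooling with stride 2 (the settings used in both definitions above). The helper names are hypothetical, not part of either framework:

```python
def conv2d_out(size: int, kernel: int, stride: int = 1, padding: int = 0) -> int:
    """Spatial size after a convolution; floor division matches 'valid' padding."""
    return (size + 2 * padding - kernel) // stride + 1


def maxpool_out(size: int, pool: int = 2, stride: int = 2) -> int:
    """Spatial size after max pooling with no padding (floor mode)."""
    return (size - pool) // stride + 1


def flattened_features(input_size: int = 32, channels: int = 16) -> int:
    """Number of features fed to the final dense/linear layer of the example network."""
    s = conv2d_out(input_size, kernel=3)  # conv1: 32 -> 30
    s = maxpool_out(s)                    # pool:  30 -> 15
    s = conv2d_out(s, kernel=3)           # conv2: 15 -> 13
    s = maxpool_out(s)                    # pool:  13 -> 6 (floor)
    return channels * s * s


if __name__ == "__main__":
    print(flattened_features())  # 576, i.e. 16 * 6 * 6
```

If the input size changes, `flattened_features(new_size)` gives the value that `nn.Linear` must be updated to, whereas Keras infers it automatically.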

Performance

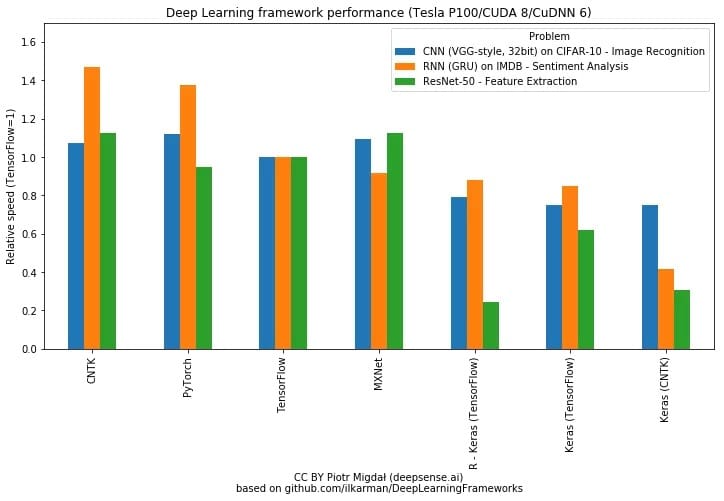

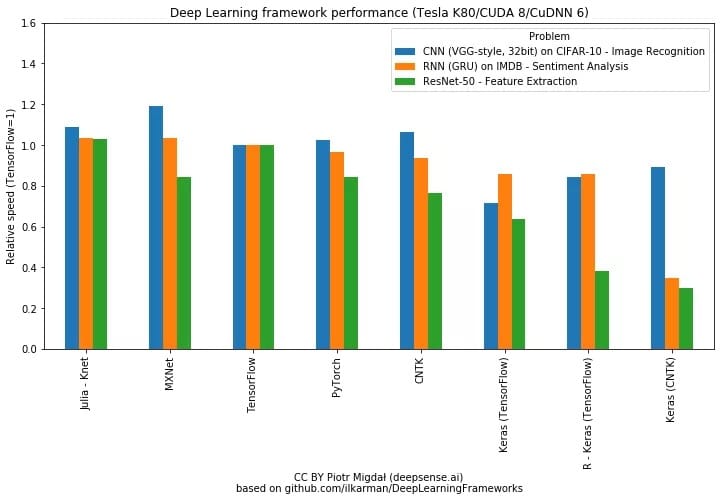

Many experiments comparing the performance of these frameworks show that PyTorch trains faster than Keras. The following two charts show the performance of different frameworks on different hardware and model types:

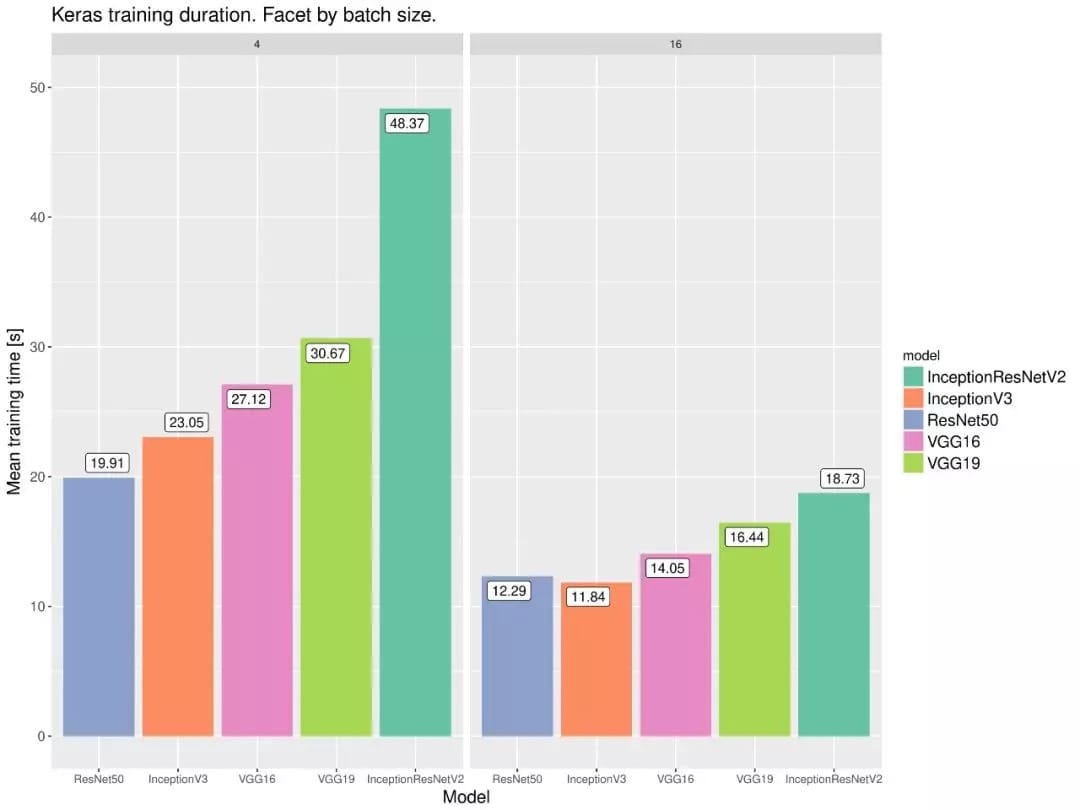

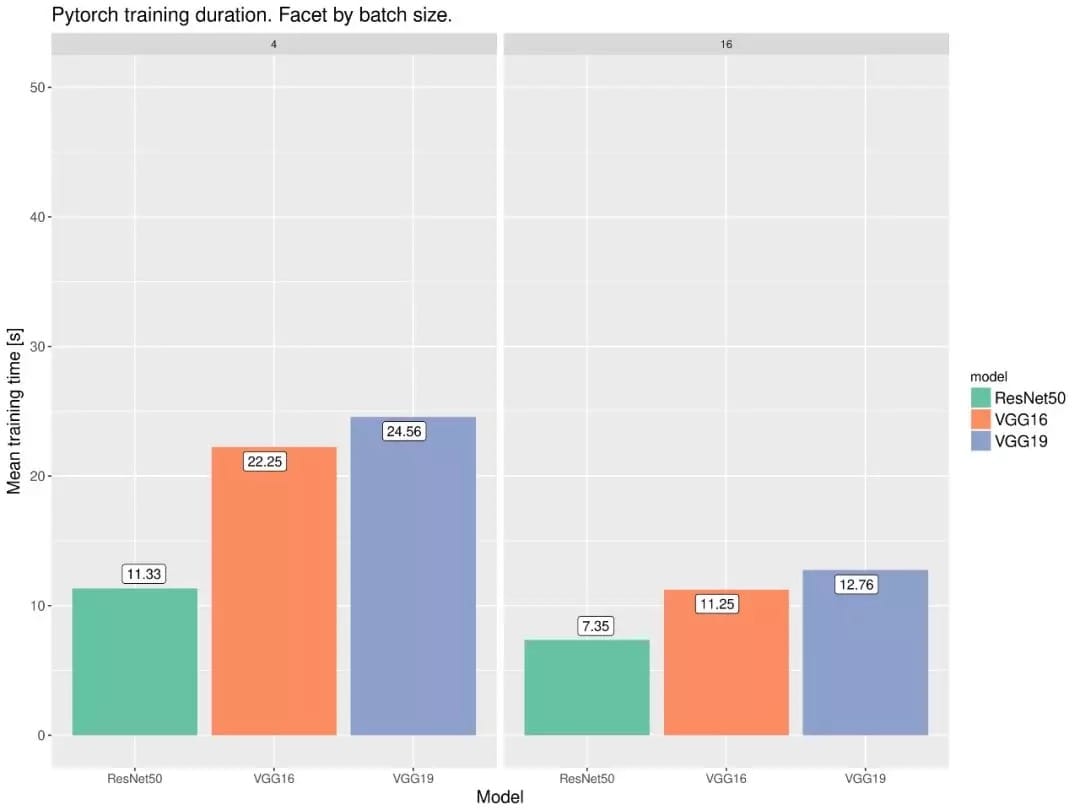

The next two charts also show the performance of different models under PyTorch and Keras. Both of these 2018 benchmarks show PyTorch to be slightly faster than Keras.

Details of the two comparisons can be found at:

- https://github.com/ilkarman/DeepLearningFrameworks/

- https://wrosinski.github.io/deep-learning-frameworks/

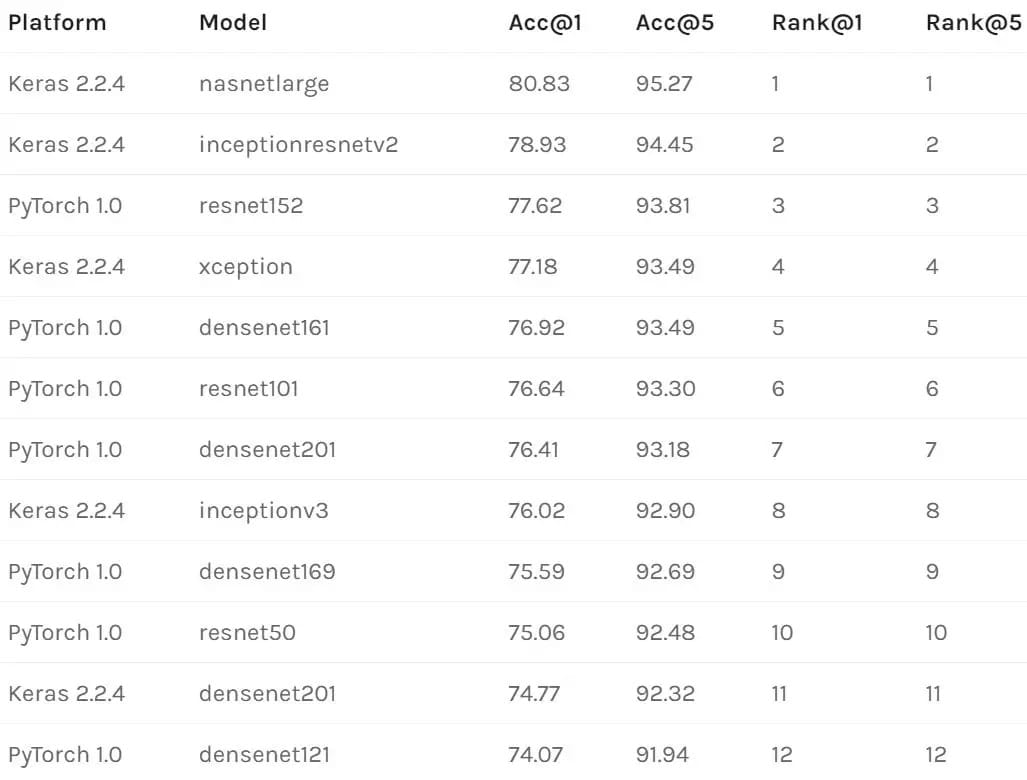

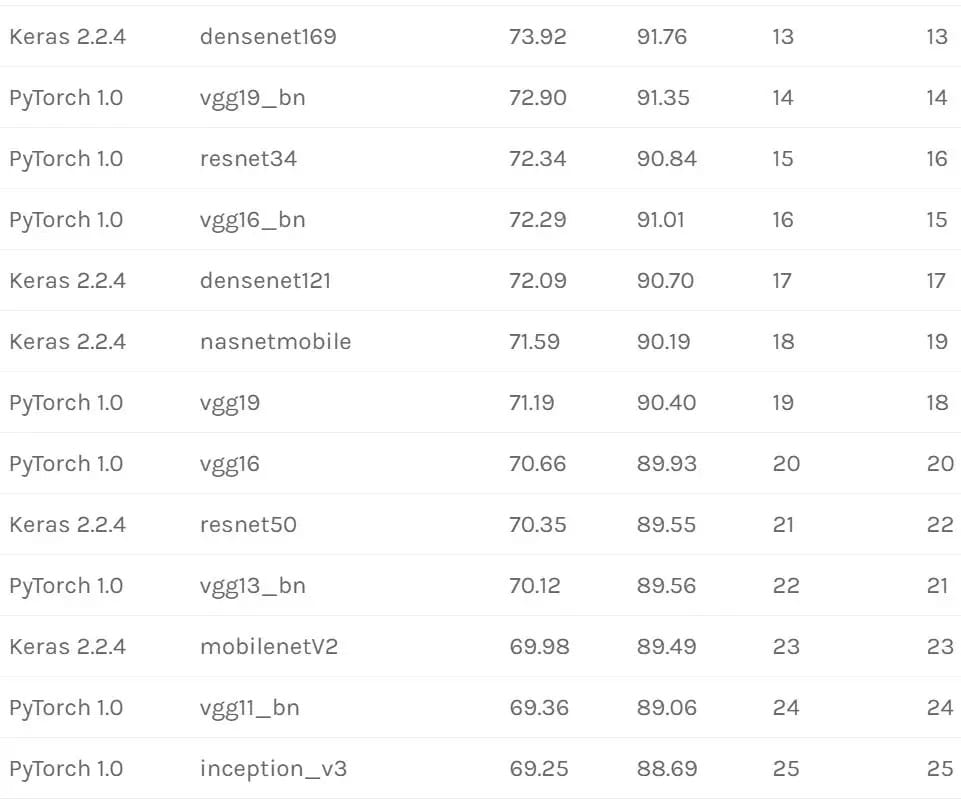

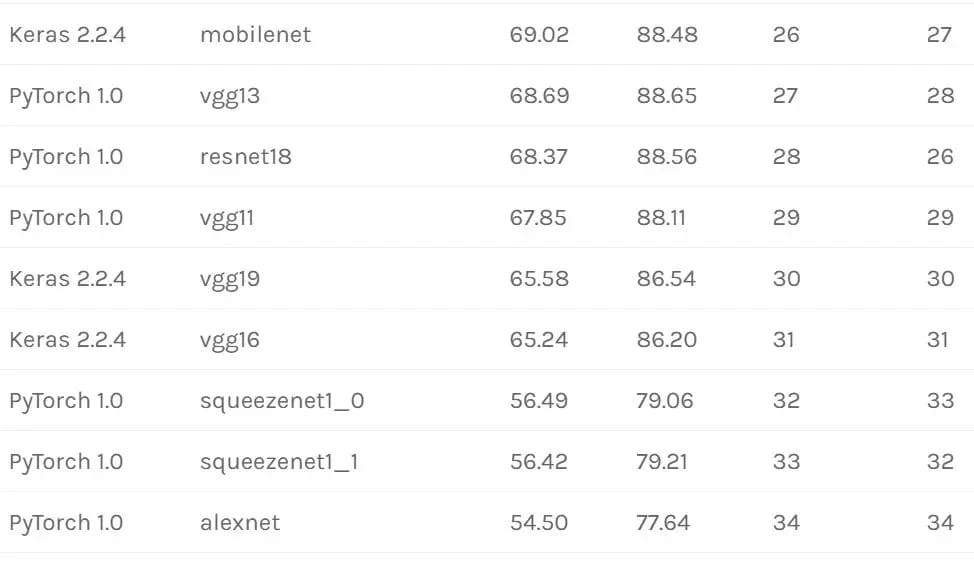

Keras and PyTorch Benchmark

Now consider the pre-trained models: how accurate is the same model on the validation set when run in different frameworks? In this project, the author reproduces 34 pre-trained models in both frameworks and reports the validation accuracy of every one. The project is therefore not only a basis for comparison but also a learning resource: what better way to learn than reading the code of the classic models directly?

Are the pre-trained models already reproducible?

In PyTorch, they are. However, some Keras users find them very difficult to reproduce. The problems they run into fall into three categories:

1. They cannot reproduce the benchmark results that Keras has published, even when copying the example code exactly. In fact, the accuracies Keras reports (as of February 2019) are usually slightly higher than the actual accuracies.

2. Some pre-trained Keras models produce inconsistent results or lower accuracy when deployed to a server or run alongside other Keras models.

3. Models that use batch normalization (BN) may be unreliable. For some models, even a forward pass at inference time still changes the weights.
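The source does not say why the BN models misbehave. One plausible mechanism, offered here only as an assumption, is a BN layer that runs in training mode during what should be inference: it then normalizes with the current batch's statistics and overwrites its stored moving averages. The toy class below is hypothetical and is not Keras's implementation (it only borrows Keras's default `momentum=0.99` and `epsilon=1e-3`), but it makes both effects visible:

```python
import math
from typing import List


class ToyBatchNorm:
    """Single-feature batch normalization without learned scale/shift, for illustration only."""

    def __init__(self, momentum: float = 0.99, eps: float = 1e-3) -> None:
        self.momentum = momentum
        self.eps = eps
        self.moving_mean = 0.0   # statistics stored in the model and used at inference
        self.moving_var = 1.0

    def __call__(self, batch: List[float], training: bool) -> List[float]:
        if training:
            # Normalize with this batch's statistics...
            mean = sum(batch) / len(batch)
            var = sum((x - mean) ** 2 for x in batch) / len(batch)
            # ...and update the stored moving averages: the model's state changes.
            m = self.momentum
            self.moving_mean = m * self.moving_mean + (1 - m) * mean
            self.moving_var = m * self.moving_var + (1 - m) * var
        else:
            # Inference mode: use the stored statistics and leave them untouched.
            mean, var = self.moving_mean, self.moving_var
        return [(x - mean) / math.sqrt(var + self.eps) for x in batch]


if __name__ == "__main__":
    bn = ToyBatchNorm()
    bn([1.0, 2.0, 3.0], training=False)
    print(bn.moving_mean)  # 0.0: inference mode leaves the state alone
    bn([1.0, 2.0, 3.0], training=True)
    print(bn.moving_mean)  # roughly 0.02: a mere "forward pass" changed the model
    # In training mode, the output for 1.0 also depends on the rest of the batch.
    print(bn([1.0, 2.0, 3.0], training=True)[0], bn([1.0, 0.0, -1.0], training=True)[0])
```

The last line also hints at why batching matters: in training mode, one example's output is not determined by that example alone.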

These issues are real, and the original GitHub project links to a report for each one. One of the author's goals is to help solve some of these problems by creating reproducible benchmarks for the Keras pre-trained models. The solution consists of the following three practices, all of which apply to Keras:

- Avoid batching during inference.

- Run one example at a time. This is very slow, but it yields reproducible output for every model.

- Run each model only inside a local function or a with statement, so that nothing from a previous model remains in memory when the next model is loaded.
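The last two practices can be sketched in plain Python. This is a minimal sketch under stated assumptions: a model is any callable mapping one example to one prediction, and each model is built by a hypothetical zero-argument loader (for Keras, such a loader might wrap a model constructor). It uses the local-function option rather than a with statement:

```python
import gc
from typing import Any, Callable, Dict, List

# Hypothetical stand-in: a zero-argument callable that builds and returns a model.
Loader = Callable[[], Callable[[Any], Any]]


def predict_in_isolation(load_model: Loader, examples: List[Any]) -> List[Any]:
    """Load a model inside this function's scope and predict one example per call.

    The only reference to the model is local, so it is released when the function returns.
    """
    model = load_model()
    # One forward pass per example: slow, but no output depends on batch composition.
    return [model(x) for x in examples]


def run_all(loaders: Dict[str, Loader], examples: List[Any]) -> Dict[str, List[Any]]:
    results = {}
    for name, load_model in loaders.items():
        results[name] = predict_in_isolation(load_model, examples)
        # Reclaim reference cycles left by the previous model before loading the next one.
        # With Keras, calling keras.backend.clear_session() here is a common extra step
        # (an assumption; the source does not prescribe it).
        gc.collect()
    return results


if __name__ == "__main__":
    toy_loaders = {
        "double": lambda: (lambda x: 2 * x),
        "square": lambda: (lambda x: x * x),
    }
    print(run_all(toy_loaders, [1, 2, 3]))  # {'double': [2, 4, 6], 'square': [1, 4, 9]}
```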

Reproduced results for the pre-trained models

The following table gives the "real" validation accuracies of the Keras and PyTorch models (verified on macOS 10.11.6, Linux Debian 9, and Ubuntu 18.04).

How to reproduce the results

First, download the ImageNet 2012 validation set, which contains 50,000 images. Once ILSVRC2012_img_val.tar has finished downloading, run the following commands to extract and preprocess the validation set:

```bash
# Credit to Soumith: https://github.com/soumith/imagenet-multiGPU.torch
$ cd ../ && mkdir val && mv ILSVRC2012_img_val.tar val/ && cd val && tar -xvf ILSVRC2012_img_val.tar
$ wget -qO- https://raw.githubusercontent.com/soumith/imagenetloader.torch/master/valprep.sh | bash
```

The top-5 predictions for every example in the ImageNet validation set have already been computed. Running the following commands uses these precomputed results directly and reproduces the Keras and PyTorch benchmarks in a matter of seconds:

```bash
$ git clone https://github.com/cgnorthcutt/benchmarking-keras-pytorch.git
$ cd benchmarking-keras-pytorch
$ python imagenet_benchmarking.py /path/to/imagenet_val_data
```

You can also reproduce the inference outputs of every Keras and PyTorch model without the precomputed data. Keras inference takes a long time (5-10 hours) because the forward pass is computed for only one example at a time, avoiding vectorized (batched) computation; this is the only way the author found to reliably reproduce the same accuracies. PyTorch inference is very fast (under an hour). The commands to reproduce everything from scratch are:

```bash
$ git clone https://github.com/cgnorthcutt/benchmarking-keras-pytorch.git
$ cd benchmarking-keras-pytorch
$ # Compute outputs of PyTorch models (1 hour)
$ ./imagenet_pytorch_get_predictions.py /path/to/imagenet_val_data
$ # Compute outputs of Keras models (5-10 hours)
$ ./imagenet_keras_get_predictions.py /path/to/imagenet_val_data
$ # View benchmark results
$ ./imagenet_benchmarking.py /path/to/imagenet_val_data
```

You can control GPU usage, batch size, the output directory, and more; run a script with the -h flag to see its command-line options.
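Because the precomputed results are top-5 predictions, both top-1 and top-5 accuracy can be derived from them. How imagenet_benchmarking.py actually stores and scores these predictions is not described here, so the following is only a generic sketch of the metric, assuming each example's predictions are a list of class indices ordered from most to least confident:

```python
from typing import Sequence


def top_k_accuracy(top5: Sequence[Sequence[int]], labels: Sequence[int], k: int) -> float:
    """Fraction of examples whose true label is among the first k predicted classes.

    `top5[i]` lists class indices for example i, most confident first (assumed format).
    """
    if not labels:
        raise ValueError("need at least one example")
    if len(top5) != len(labels):
        raise ValueError("need exactly one prediction list per label")
    if not 1 <= k <= min(len(p) for p in top5):
        raise ValueError("k must be between 1 and the number of stored predictions")
    hits = sum(1 for preds, label in zip(top5, labels) if label in preds[:k])
    return hits / len(labels)


if __name__ == "__main__":
    preds = [[3, 1, 2, 4, 5], [0, 7, 8, 9, 6], [2, 5, 1, 0, 3]]
    labels = [3, 7, 4]
    print(top_k_accuracy(preds, labels, k=1))  # 1/3: only the first example is right at k=1
    print(top_k_accuracy(preds, labels, k=5))  # 2/3: class 4 never appears for example 3
```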

This article is reposted from Synced (机器之心).
